#Sensors in Autonomous Vehicles


Sensors in Autonomous Vehicles

Introduction

The entire discussion of sensors in autonomous vehicles comes down to one major question: will the vehicle’s brain (i.e., the computer) be able to make decisions the way a human brain does?

Whether a computer can actually make these decisions is an altogether different topic, but it is just as important for an automotive company working on self-driving technology to provide the computer with the necessary and sufficient data to make them.

This is where sensors, and in particular, their integration with the computing system comes into the picture.

Types of Sensors in Autonomous Vehicles:

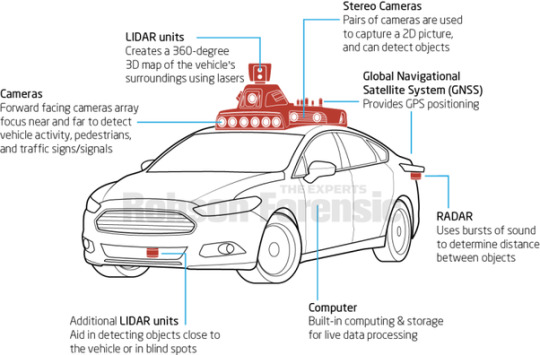

There are three main types of sensors used to map the environment around an autonomous vehicle: vision-based (cameras), radio-based (radar), and light/laser-based (LiDAR).

Each of these three types is explained briefly below:

Cameras

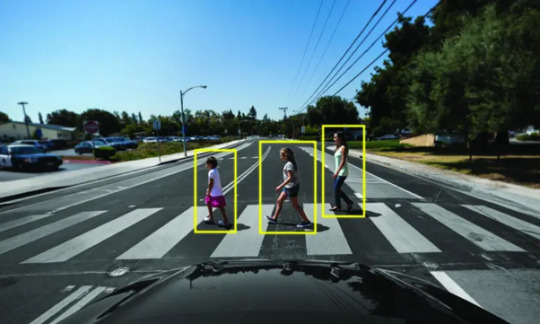

High-resolution video cameras can be installed in multiple locations around the vehicle’s body to gain a 360° view of the surroundings of the vehicle. They capture images and provide the data required to identify different objects such as traffic lights, pedestrians, and other cars on the road.

Source: How Does a Self-Driving Car See? | NVIDIA Blog

The biggest advantage of using data from high-resolution cameras is that objects can be accurately identified, and this data is used to build 3D maps of the vehicle’s surroundings. However, cameras don’t perform as well in poor visibility, such as at night or in heavy rain or fog.

Types of vision-based sensors used:

Monocular vision sensor

Monocular vision sensors use a single camera to help detect pedestrians and vehicles on the road. This system relies heavily on object classification, meaning it can only detect objects it has been trained to classify. Such systems can be trained to detect and classify objects through millions of miles of simulated driving.

When it classifies an object, it compares the size of that object with reference sizes it has stored in memory. For example, let’s say that the system has classified a certain object as a truck.

If the system knows how big a truck appears in an image at a specific distance, it can compare that with the apparent size of the new truck and calculate the distance accordingly.
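A minimal sketch of this size-based distance estimate, assuming a pinhole-camera model in which an object's apparent height in pixels is inversely proportional to its distance, and assuming all objects of a class (here, trucks) have roughly the same real height. The calibration values `TRUCK_REF_HEIGHT_PX` and `TRUCK_REF_DISTANCE_M` are hypothetical.

```python
def estimate_distance_m(ref_height_px: float, ref_distance_m: float,
                        observed_height_px: float) -> float:
    """Estimate distance to a classified object from its apparent size.

    Under a pinhole-camera model, apparent height (px) times distance is
    constant for objects of the same real height, so
    d = d_ref * h_ref / h_observed.
    """
    if observed_height_px <= 0:
        raise ValueError("observed height must be positive")
    return ref_distance_m * ref_height_px / observed_height_px


# Hypothetical calibration: a typical truck appears 200 px tall at 10 m.
TRUCK_REF_HEIGHT_PX = 200.0
TRUCK_REF_DISTANCE_M = 10.0

# A newly detected truck only 50 px tall is estimated to be 4x farther away.
distance = estimate_distance_m(TRUCK_REF_HEIGHT_PX, TRUCK_REF_DISTANCE_M, 50.0)
print(f"{distance:.1f} m")  # 40.0 m
```

The weakness described next follows directly from this approach: the calculation needs a reference size, and a reference size only exists for object classes the system recognizes.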

A test scene with detected classified objects (blue bounding boxes) and unclassified objects (red bounding boxes). Source: https://www.foresightauto.com/stereo-vs-mono/

But if the system encounters an object which it is unable to classify, that object will go undetected. This is a major concern for autonomous system developers.

Stereo vision sensor

A stereo vision system consists of a dual-camera setup, which helps in accurately measuring the distance to a detected object even if it doesn’t recognize what the object is.

Since the system has two distinct lenses, it works much like a pair of human eyes and can perceive the depth of an object.

Because the two lenses capture slightly different images, the system can calculate the distance between the object and the camera by triangulation.
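For a rectified stereo pair (both cameras parallel and row-aligned, which is an assumption here), triangulation reduces to Z = f·B/d. In this formula, f is the focal length in pixels, B is the baseline between the two lenses, and d is the disparity, meaning the horizontal pixel shift of the same point between the left and right images. The rig parameters below are hypothetical. Note that no object class is needed, which is the stereo advantage described above.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   x_left_px: float, x_right_px: float) -> float:
    """Depth of a point seen in both images of a rectified stereo pair.

    Disparity is the horizontal shift of the point between the two images;
    closer objects have larger disparity, so depth = f * B / disparity.
    """
    disparity_px = x_left_px - x_right_px
    if disparity_px <= 0:
        raise ValueError("point must have positive disparity")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, lenses 12 cm apart.
depth = stereo_depth_m(700.0, 0.12, x_left_px=420.0, x_right_px=400.0)
print(f"{depth:.2f} m")  # 4.20 m
```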

Source: https://sites.tufts.edu/eeseniordesignhandbook/files/2019/05/David_Lackner_Tech_Note_V2.0.pdf

Radar Sensors in Autonomous Vehicles

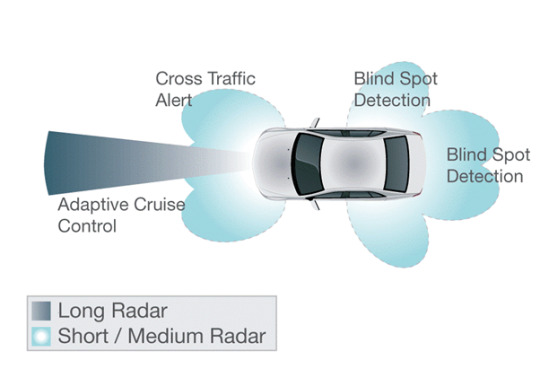

Radar sensors use radio waves to read the environment and get an accurate reading of an object’s size, angle, and velocity.

A transmitter inside the sensor sends out radio waves. Based on the time these waves take to be reflected back to the sensor, the sensor calculates the object’s distance from the host vehicle, and the frequency shift of the reflected waves (the Doppler effect) is used to determine the object’s relative velocity.
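The two core radar measurements can be sketched as follows. Range comes from the round-trip time of the echo (the wave travels out and back, hence the division by 2). Radial velocity comes from the Doppler shift, v = f_D·λ/2. The 77 GHz carrier is only an example value typical of automotive radar. The echo time and Doppler shift are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def radar_range_m(round_trip_time_s: float) -> float:
    """Distance to the target from the echo's round-trip time."""
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def radial_velocity_m_s(doppler_shift_hz: float, carrier_freq_hz: float) -> float:
    """Relative (radial) speed from the Doppler shift of the echo.

    With this sign convention, a positive shift means the target is approaching.
    """
    wavelength_m = SPEED_OF_LIGHT_M_S / carrier_freq_hz
    return doppler_shift_hz * wavelength_m / 2.0


# Hypothetical echo: returns after 1 microsecond with a 10 kHz Doppler shift.
print(f"{radar_range_m(1e-6):.1f} m")                # 149.9 m
print(f"{radial_velocity_m_s(10e3, 77e9):.2f} m/s")  # 19.47 m/s
```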

Source: https://riversonicsolutions.com/self-driving-cars-expert-witness-physics-drives-the-technology/

Radar has long been used in weather forecasting and ocean navigation because it performs consistently across a wide range of weather conditions, which gives it an advantage over vision-based sensors.

However, they are not always accurate when it comes to identifying a specific object and classifying it (which is an important step in making decisions for a self-driving car).

Radar sensors can be classified by their operating range:

Short-Range Radar (SRR) (0.2 to 30 m): The major advantage of short-range radar sensors is their high resolution. This is extremely important, as a pedestrian standing in front of a larger object may not be properly detected at low resolution.

Medium-Range Radar (MRR) (30 to 80 m)

Long-Range Radar (LRR) (80 m to more than 200 m): These sensors are most useful for Adaptive Cruise Control (ACC) and highway Automatic Emergency Braking (AEB) systems.

LiDAR sensors

LiDAR (Light Detection and Ranging) offers some advantages over vision-based and radar sensors. It emits thousands of high-speed laser pulses, and their reflections give a much more accurate sense of an object’s size, its distance from the host vehicle, and its other features.

Together, the returns from many pulses form a point cloud (a set of points in 3D space), which means a LiDAR sensor also gives a three-dimensional view of an object.
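Each LiDAR return is typically reported as a range plus the beam's horizontal (azimuth) and vertical (elevation) angles. Converting these spherical coordinates to x/y/z gives the point cloud. The sketch below is a minimal version. It assumes angles in degrees and a sensor frame with x forward, y left, and z up; conventions vary between sensors. The sample returns are hypothetical.

```python
import math
from typing import Iterable, List, Tuple

Point3D = Tuple[float, float, float]


def returns_to_point_cloud(
        returns: Iterable[Tuple[float, float, float]]) -> List[Point3D]:
    """Convert (range_m, azimuth_deg, elevation_deg) returns to x/y/z points."""
    cloud: List[Point3D] = []
    for range_m, azimuth_deg, elevation_deg in returns:
        az = math.radians(azimuth_deg)
        el = math.radians(elevation_deg)
        horizontal = range_m * math.cos(el)  # projection onto the ground plane
        cloud.append((horizontal * math.cos(az),  # x: forward
                      horizontal * math.sin(az),  # y: left
                      range_m * math.sin(el)))    # z: up
    return cloud


# Hypothetical returns: straight ahead, 90 degrees to the left, slightly above.
points = returns_to_point_cloud([(10.0, 0.0, 0.0), (5.0, 90.0, 0.0), (20.0, 0.0, 5.0)])
for x, y, z in points:
    print(f"x={x:6.2f}  y={y:6.2f}  z={z:6.2f}")
```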

Source: How LiDAR Fits Into the Future of Autonomous Driving

LiDAR sensors often detect small objects with high precision, thus improving the accuracy of object identification. Moreover, LiDAR sensors can be configured to give a 360° view of the objects around the vehicle as well, thus reducing the requirement for multiple sensors of the same type.

The drawback, however, is that LiDAR sensors have a complex design and architecture, which means that integrating a LiDAR sensor into a vehicle can increase manufacturing costs multifold.

Moreover, these sensors need high computing power, which makes them difficult to integrate into a compact design.

Most LiDAR sensors use a 905 nm wavelength, which can provide accurate data up to 200 m in a restricted field of view. Some companies are also working on 1550 nm LiDAR sensors, which will have even better accuracy over a longer range.

Ultrasonic Sensors

Ultrasonic sensors are mostly used in low-speed automotive applications.

Most parking assist systems have ultrasonic sensors, as they provide an accurate reading of the distance between an obstacle and the car, irrespective of the size and shape of the obstacle.

The ultrasonic sensor consists of a transmitter-receiver setup. The transmitter sends out ultrasonic sound waves, and the distance to the obstacle is calculated from the time between transmitting a wave and receiving its echo.
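The echo-timing calculation can be sketched as below. Sound travels far more slowly than light, and its speed depends on air temperature. The sketch therefore uses the common linear approximation c ≈ 331.3 + 0.606·T m/s (T in °C) for dry air. The 20 °C default and the echo time are hypothetical example values.

```python
def speed_of_sound_m_s(air_temp_c: float) -> float:
    """Approximate speed of sound in dry air (linear fit near room temperature)."""
    return 331.3 + 0.606 * air_temp_c


def echo_distance_m(echo_time_s: float, air_temp_c: float = 20.0) -> float:
    """Distance to the obstacle: the pulse travels there and back, so halve it."""
    return speed_of_sound_m_s(air_temp_c) * echo_time_s / 2.0


# Hypothetical echo arriving 5.8 ms after the pulse was sent.
print(f"{echo_distance_m(0.0058):.2f} m")         # 1.00 m
print(f"{echo_distance_m(0.0058, -10.0):.2f} m")  # 0.94 m (same echo, cold day)
```

Ignoring temperature would shift the reading by several centimetres at 1 m. That error matters when the sensor is used for parking.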

Source: Ultrasonic Sensor working applications and advantages

Ultrasonic sensors have a detection range from a few centimetres up to about 5 m, with precise distance measurement.

They can also detect objects at a very small distance from the vehicle, which can be extremely helpful while parking your vehicle.

Ultrasonic sensors can also be used to detect conditions around the vehicle and help with V2V (vehicle-to-vehicle) and V2I (vehicle-to-infrastructure) connectivity.

Sensor data from thousands of such connected vehicles can help in building algorithms for autonomous vehicles and can offer reference data for many scenarios, conditions and locations.

Challenges in handling sensors

The main challenge in handling sensors is getting an accurate reading from the sensor while filtering out the noise. Noise refers to unwanted fluctuations or disturbances in a signal, which can reduce the accuracy and precision of the measurement.

It is important to tell the system which part of the signal is important and which needs to be ignored. Noise filtering is a set of processes that are performed to remove the noise contained within the data.

Noise Filtering

The main source of uncertainty when relying on individual sensors is unwanted noise or interference from the environment.

Of course, any data picked up by any sensor consists of a signal part (which we need) and a noise part (which we want to ignore). The uncertainty lies in not knowing how much noise is present in any given data.

High-frequency noise, in particular, can significantly distort sensor measurements. Since we want the signal to be as precise as possible, it is important to remove such high-frequency noise.

Noise filters are divided into linear (e.g., simple moving average) and non-linear (e.g., median) filters. The most commonly used noise filters are:

Low-pass filter – It passes signals with a frequency lower than a certain cut-off frequency and attenuates signals with frequencies higher than the cut-off frequency.

High-pass filter – It passes signals with a frequency higher than a certain cut-off frequency and attenuates signals with frequencies lower than the cut-off frequency.
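The filters named above can be sketched on a plain list of samples. The sketch includes a moving average (linear) and a median filter (non-linear) over a trailing window, plus first-order (single-pole) low-pass and high-pass filters. For the first-order filters, the smoothing factor `alpha` relates to the cut-off frequency f_c and sample period dt through RC = 1/(2π·f_c). With that RC, alpha = dt/(RC + dt) for the low-pass and alpha = RC/(RC + dt) for the high-pass. The sample readings are hypothetical.

```python
from statistics import median
from typing import Iterator, List, Sequence


def trailing_windows(x: Sequence[float], window: int) -> Iterator[Sequence[float]]:
    """Yield the last `window` samples up to each index (shorter at the start)."""
    for i in range(len(x)):
        yield x[max(0, i - window + 1):i + 1]


def moving_average(x: Sequence[float], window: int) -> List[float]:
    """Linear filter: mean of each trailing window."""
    return [sum(w) / len(w) for w in trailing_windows(x, window)]


def median_filter(x: Sequence[float], window: int) -> List[float]:
    """Non-linear filter: median of each trailing window; rejects isolated spikes."""
    return [median(w) for w in trailing_windows(x, window)]


def low_pass(x: Sequence[float], alpha: float) -> List[float]:
    """First-order low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1]), 0 < alpha <= 1."""
    if not x:
        return []
    y = [x[0]]
    for sample in x[1:]:
        y.append(y[-1] + alpha * (sample - y[-1]))
    return y


def high_pass(x: Sequence[float], alpha: float) -> List[float]:
    """First-order high-pass: y[n] = alpha * (y[n-1] + x[n] - x[n-1]), 0 < alpha < 1."""
    if not x:
        return []
    y = [0.0]
    for n in range(1, len(x)):
        y.append(alpha * (y[-1] + x[n] - x[n - 1]))
    return y


readings = [1.0, 1.0, 9.0, 1.0, 1.0]  # hypothetical: one noise spike
print(moving_average(readings, 3))  # the spike is smeared across three samples
print(median_filter(readings, 3))   # [1.0, 1.0, 1.0, 1.0, 1.0]
```

The contrast shows the linear/non-linear distinction: averaging spreads a single outlier into its neighbours, while the median discards it.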

Other common filters include the Kalman filter, Recursive Least Squares (RLS), and Least Mean Squares (LMS).
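Of these, the Kalman filter is widely used for sensor data. Below is a minimal one-dimensional sketch. It assumes the measured quantity (for example, the distance to a stationary obstacle) is roughly constant between readings. `process_var` and `measurement_var` are tuning assumptions: the first describes how much the true value drifts, the second how noisy the sensor is. Real vehicle trackers use a multi-dimensional state (position, velocity) that is not shown here.

```python
from typing import List, Sequence


def kalman_1d(measurements: Sequence[float], process_var: float,
              measurement_var: float, initial_estimate: float = 0.0,
              initial_var: float = 1.0) -> List[float]:
    """Scalar Kalman filter for a (nearly) constant quantity."""
    estimate, variance = initial_estimate, initial_var
    estimates: List[float] = []
    for z in measurements:
        # Predict: the value is assumed unchanged, but our uncertainty grows.
        variance += process_var
        # Update: the Kalman gain weighs the new reading against the prediction.
        gain = variance / (variance + measurement_var)
        estimate += gain * (z - estimate)
        variance *= (1.0 - gain)
        estimates.append(estimate)
    return estimates


# Hypothetical noisy readings of an obstacle about 2 m away.
readings = [2.3, 1.8, 2.1, 1.7, 2.2, 2.0, 1.9, 2.4, 1.8, 2.0]
smoothed = kalman_1d(readings, process_var=1e-4, measurement_var=0.04,
                     initial_estimate=readings[0])
print([round(v, 2) for v in smoothed])
```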

Autonomous Vehicle Feature Development at Dorle Controls

At Dorle Controls, we strive to provide bespoke software development and integration solutions for autonomous vehicles as well.

This includes individual need-based application software development as well as developing the entire software stack for an autonomous vehicle. Write to [email protected] to learn more about our capabilities in this domain.


Smart Traction: Intelligent All-Wheel Drive Market Accelerates to $49.3 Billion by 2030

The intelligent all-wheel drive market is experiencing remarkable momentum as automotive manufacturers integrate advanced electronics and artificial intelligence into drivetrain systems to deliver superior performance, safety, and efficiency. With an estimated revenue of $29.9 billion in 2024, the market is projected to grow at an impressive compound annual growth rate (CAGR) of 8.7% from 2024 to 2030, reaching $49.3 billion by the end of the forecast period. This robust growth reflects the automotive industry's evolution toward smarter, more responsive drivetrain technologies that adapt dynamically to changing road conditions and driving scenarios.
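The growth figures quoted above are internally consistent. A quick compound-growth check shows this: a value growing at rate r for n years is multiplied by (1 + r)^n. The sketch assumes six compounding periods between 2024 and 2030.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding at `cagr` (e.g. 0.087 for 8.7%) for `years` years."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures from the text, in USD billion.
print(f"{project_value(29.9, 0.087, 6):.1f}")  # 49.3
print(f"{implied_cagr(29.9, 49.3, 6):.1%}")    # 8.7%
```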

Evolution Beyond Traditional All-Wheel Drive

Intelligent all-wheel drive systems represent a significant advancement over conventional mechanical AWD configurations, incorporating sophisticated electronic controls, multiple sensors, and predictive algorithms to optimize traction and handling in real-time. These systems continuously monitor wheel slip, steering input, throttle position, and road conditions to make instantaneous adjustments to torque distribution between front and rear axles, and increasingly between individual wheels.
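As an illustration only, the reactive core of such a system can be sketched in two steps. First, compute each axle's longitudinal slip ratio from wheel speed and vehicle speed. Second, shift torque away from the axle that is slipping more. The 60/40 front bias, the gain, and the share limits are hypothetical tuning values, not any manufacturer's calibration. The predictive part described next (sensor and GPS based) is not modeled.

```python
from typing import Tuple


def slip_ratio(wheel_speed_m_s: float, vehicle_speed_m_s: float) -> float:
    """Longitudinal slip under acceleration: (wheel - vehicle) / wheel, floored at 0."""
    if wheel_speed_m_s <= 0.0:
        return 0.0
    return max(0.0, (wheel_speed_m_s - vehicle_speed_m_s) / wheel_speed_m_s)


def split_torque(total_nm: float, front_slip: float, rear_slip: float,
                 base_front_share: float = 0.6, gain: float = 2.0,
                 min_share: float = 0.1, max_share: float = 0.9) -> Tuple[float, float]:
    """Return (front_nm, rear_nm), moving torque toward the axle with more grip."""
    front_share = base_front_share - gain * (front_slip - rear_slip)
    # Clamp to hypothetical limits so neither axle is ever fully starved.
    front_share = min(max(front_share, min_share), max_share)
    return total_nm * front_share, total_nm * (1.0 - front_share)


# Hypothetical case: vehicle at 10 m/s, front wheels spinning at 12.5 m/s on ice.
front = slip_ratio(12.5, 10.0)  # 0.2
rear = slip_ratio(10.0, 10.0)   # 0.0
print(split_torque(1000.0, front, rear))  # most torque now goes to the rear axle
```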

Unlike traditional AWD systems that react to wheel slip after it occurs, intelligent systems use predictive algorithms and sensor data to anticipate traction needs before wheel slip begins. This proactive approach enhances vehicle stability, improves fuel efficiency, and provides superior performance across diverse driving conditions from highway cruising to off-road adventures.

Consumer Demand for Enhanced Safety and Performance

Growing consumer awareness of vehicle safety and performance capabilities is driving increased demand for intelligent AWD systems. Modern drivers expect vehicles that can confidently handle adverse weather conditions, challenging terrain, and emergency maneuvering situations. Intelligent AWD systems provide these capabilities while maintaining the fuel efficiency advantages of front-wheel drive during normal driving conditions.

The rise of active lifestyle trends and outdoor recreation activities has increased consumer interest in vehicles capable of handling diverse terrain and weather conditions. Intelligent AWD systems enable crossovers and SUVs to deliver genuine all-terrain capability without compromising on-road refinement and efficiency.

SUV and Crossover Market Expansion

The global shift toward SUVs and crossover vehicles is a primary driver of intelligent AWD market growth. These vehicle segments increasingly offer AWD as standard equipment or popular options, with intelligent systems becoming key differentiators in competitive markets. Manufacturers are positioning advanced AWD capabilities as premium features that justify higher trim levels and increased profitability.

Luxury vehicle segments are particularly driving innovation in intelligent AWD technology, with features such as individual wheel torque vectoring, terrain-specific driving modes, and integration with adaptive suspension systems. These advanced capabilities create compelling value propositions for consumers seeking both performance and versatility.

Electric Vehicle Integration Opportunities

The electrification of automotive powertrains presents unique opportunities for intelligent AWD systems. Electric vehicles can implement AWD through individual wheel motors or dual-motor configurations that provide precise torque control impossible with mechanical systems. Electric AWD systems offer instant torque delivery, regenerative braking coordination, and energy management optimization.

Hybrid vehicles benefit from intelligent AWD systems that coordinate internal combustion engines with electric motors to optimize performance and efficiency. These systems can operate in electric-only AWD mode for quiet, emissions-free driving or combine power sources for maximum performance when needed.

Advanced Sensor Technology and Data Processing

Modern intelligent AWD systems incorporate multiple sensor technologies including accelerometers, gyroscopes, wheel speed sensors, and increasingly, cameras and radar systems that monitor road conditions ahead of the vehicle. Machine learning algorithms process this sensor data to predict optimal torque distribution strategies for varying conditions.

GPS integration enables intelligent AWD systems to prepare for upcoming terrain changes, weather conditions, and road characteristics based on location data and real-time traffic information. This predictive capability allows systems to optimize performance before challenging conditions are encountered.

Manufacturer Competition and Innovation

Intense competition among automotive manufacturers is driving rapid innovation in intelligent AWD technology. Brands are developing proprietary systems with unique characteristics and branding to differentiate their vehicles in crowded markets. This competition accelerates technological advancement while providing consumers with increasingly sophisticated options.

Partnerships between automotive manufacturers and technology companies are creating new capabilities in intelligent AWD control systems. Artificial intelligence, cloud computing, and advanced materials are being integrated to create more responsive and efficient systems.

Regional Market Dynamics

Different global markets exhibit varying demand patterns for intelligent AWD systems based on climate conditions, terrain characteristics, and consumer preferences. Northern markets with harsh winter conditions show strong demand for advanced traction systems, while emerging markets focus on systems that provide value-oriented performance improvements.

Regulatory requirements for vehicle stability and safety systems in various regions influence the adoption of intelligent AWD technology. Standards for electronic stability control and traction management create baseline requirements that intelligent AWD systems can exceed.

Manufacturing and Cost Considerations

The increasing sophistication of intelligent AWD systems requires significant investment in research and development, manufacturing capabilities, and supplier relationships. However, economies of scale and advancing semiconductor technology are helping to reduce system costs while improving performance and reliability.

Modular system designs enable manufacturers to offer different levels of AWD sophistication across vehicle lineups, from basic intelligent systems in entry-level models to advanced torque-vectoring systems in performance vehicles.

#intelligent all-wheel drive#smart AWD systems#advanced traction control#automotive drivetrain technology#AWD market growth#intelligent torque distribution#electronic stability control#vehicle dynamics systems#all-terrain vehicle technology#automotive safety systems#performance AWD#electric vehicle AWD#hybrid drivetrain systems#torque vectoring technology#predictive AWD control#adaptive traction systems#automotive electronics#drivetrain electrification#active differential systems#terrain management systems#AWD coupling technology#automotive sensors#machine learning automotive#AI-powered drivetrain#connected vehicle systems#autonomous driving technology#SUV market growth#crossover vehicle technology#premium automotive features#automotive innovation trends


#data#sensor#artificialintelligence#selfdriving#cars#autonomous#vehicles#powerelectronics#powersemiconductor#powermanagement


The global autonomous vehicle sensor-compatible coating market is likely to show robust growth during the forecast period.


What We Learned from Flying a Helicopter on Mars

The Ingenuity Mars Helicopter made history – not only as the first aircraft to perform powered, controlled flight on another world – but also for exceeding expectations, pushing the limits, and setting the stage for future NASA aerial exploration of other worlds.

Built as a technology demonstration designed to perform up to five experimental test flights over 30 days, Ingenuity performed flight operations from the Martian surface for almost three years. The helicopter ended its mission on Jan. 25, 2024, after sustaining damage to its rotor blades during its 72nd flight.

So, what did we learn from this small but mighty helicopter?

We can fly rotorcraft in the thin atmosphere of other planets.

Ingenuity proved that powered, controlled flight is possible on other worlds when it took to the Martian skies for the first time on April 19, 2021.

Flying on planets like Mars is no easy feat: The Red Planet has a significantly lower gravity – one-third that of Earth’s – and an extremely thin atmosphere, with only 1% the pressure at the surface compared to our planet. This means there are relatively few air molecules with which Ingenuity’s two 4-foot-wide (1.2-meter-wide) rotor blades can interact to achieve flight.

Ingenuity performed several flights dedicated to understanding key aerodynamic effects and how they interact with the structure and control system of the helicopter, providing us with a treasure-trove of data on how aircraft fly in the Martian atmosphere.

Now, we can use this knowledge to directly improve performance and reduce risk on future planetary aerial vehicles.

Creative solutions and “ingenuity” kept the helicopter flying longer than expected.

Over an extended mission that lasted for almost 1,000 Martian days (more than 33 times longer than originally planned), Ingenuity was upgraded with the ability to autonomously choose landing sites in treacherous terrain, dealt with a dead sensor, dusted itself off after dust storms, operated from 48 different airfields, performed three emergency landings, and survived a frigid Martian winter.

Fun fact: To keep costs low, the helicopter contained many commercial off-the-shelf parts from the smartphone industry, parts that had never been tested in deep space. Those parts also surpassed expectations, proving durable throughout Ingenuity’s extended mission, and can inform future budget-conscious hardware solutions.

There is value in adding an aerial dimension to interplanetary surface missions.

Ingenuity traveled to Mars on the belly of the Perseverance rover, which served as the communications relay for Ingenuity and, therefore, was its constant companion. The helicopter also proved itself a helpful scout to the rover.

After its initial five flights in 2021, Ingenuity transitioned to an “operations demonstration,” serving as Perseverance’s eyes in the sky as it scouted science targets, potential rover routes, and inaccessible features, while also capturing stereo images for digital elevation maps.

Airborne assets like Ingenuity unlock a new dimension of exploration on Mars that we did not yet have – providing more pixels per meter of resolution for imaging than an orbiter and exploring locations a rover cannot reach.

Tech demos can pay off big time.

Ingenuity was flown as a technology demonstration payload on the Mars 2020 mission, and was a high-risk, high-reward, low-cost endeavor that paid off big. The data collected by the helicopter will be analyzed for years to come and will benefit future Mars and other planetary missions.

Just as the Sojourner rover led to the MER-class (Spirit and Opportunity) rovers, and the MSL-class (Curiosity and Perseverance) rovers, the team believes Ingenuity’s success will lead to future fleets of aircraft at Mars.

In general, NASA’s Technology Demonstration Missions test and advance new technologies, and then transition those capabilities to NASA missions, industry, and other government agencies. Chosen technologies are thoroughly ground- and flight-tested in relevant operating environments — reducing risks to future flight missions, gaining operational heritage and continuing NASA’s long history as a technological leader.

You can fall in love with robots on another planet.

Following in the tracks of beloved Martian rovers, the Ingenuity Mars Helicopter built up a worldwide fanbase. The Ingenuity team and public awaited every single flight with anticipation, awe, humor, and hope.

Check out #ThanksIngenuity on social media to see what’s been said about the helicopter’s accomplishments.

Learn more about Ingenuity’s accomplishments here. And make sure to follow us on Tumblr for your regular dose of space!


Sim4CAMSens project receives UK grant to advance AV perception sensors

The latest round of grants from the Centre for Connected and Autonomous Vehicles (CCAV) in the UK includes a £2m ($2.49m) grant to the Sim4CAMSens project, which develops perception sensors for autonomous vehicles.

The project is run by a consortium including Claytex, rFpro, Syselek, Oxford RF, WMG, National Physical Laboratory, Compound Semiconductor Applications Catapult and AESIN. It will develop a sensor evaluation framework that spans modeling, simulation and physical testing, and will involve the creation of new sensor models, improved noise models, new material models and new test methods to allow ADAS and sensor developers to accelerate their development.

Continue reading Sim4CAMSens project receives UK grant to advance AV perception sensors at ADAS & Autonomous Vehicle International. https://www.autonomousvehicleinternational.com/news/sensors/sim4camsens-project-receives-uk-grant-to-advance-av-perception-sensors.html


#autonomous vehicles#delivery drones#dedicated lanes#Japan#labor shortages#logistics#transportation networks#depopulated areas#Shin-Tomei Expressway#self-driving trucks#sensors#cameras#Level 4 autonomy#Road Traffic Law#highways#Chichibu#Saitama Prefecture#cargo transport#power transmission lines#inspections#digital infrastructure#study council#investment#efficient transportation#technologically advanced#revitalizing#seamless movement#goods#supplies#dynamic landscape


Meet the MOLA AUV, a multimodality, observing, low-cost, agile autonomous underwater vehicle that features advanced sensors for surveying marine ecosystems. 🤖🪸

At the core of the MOLA AUV is a commercially available Boxfish submersible, built to the CoMPAS Lab's specifications and enhanced with custom instruments and sensors developed by MBARI engineers. The MOLA AUV is equipped with a 4K camera to record high-resolution video of marine life and habitats. Sonar systems use acoustics to ensure the vehicle can consistently “see” 30 meters (100 feet) ahead and work in tandem with stereo cameras that take detailed imagery of the ocean floor.

Leveraging methods developed by the CoMPAS Lab, the vehicle’s six degrees of freedom enable it to move and rotate in any direction efficiently. This agility and portability set the MOLA AUV apart from other underwater vehicles and allow it to leverage software algorithms developed at MBARI to create three-dimensional photo reconstructions of seafloor environments.

In its first field test in the Maldives, the MOLA AUV successfully mapped coral reefs and collected crucial ocean data. With the MOLA AUV’s open-source technology, MBARI hopes to make ocean science more accessible than ever. Watch now to see MOLA in action.

Learn more about this remarkable robot on our website.


Greetings, folks! Did somebody say fungus bots? It's time to spore some trouble, I guess :) OK, it wasn't funny, I get it. Anyway, meet the new fungus-based biohybrid bot.

Before I explain how it works, let's take a look at its backstory, shall we?

The idea is actually fairly old: it grew out of experiments in soft-body robotics and biorobotics, and today it has been reshaped into what we call biohybrid robotics, driven by the search for more sustainable, self-healing, and biodegradable materials. Traditional robots are often made from synthetic materials and metals, which can be rigid, non-biodegradable, and challenging to repair. The researchers at Cornell University sought to overcome these limitations by integrating biological elements into robotic systems.

The team turned to mycelium, the root-like structure of fungi, which has the unique ability to grow, self-repair, and biodegrade. Mycelium is also known for its strength and flexibility, making it an ideal candidate for use in soft robotics. By embedding mycelium within a network of sensors and actuators, the researchers created a biohybrid bot capable of sensing its environment and responding to stimuli, all while being environmentally friendly.

This fungus bot represents a significant step towards more sustainable robotics, demonstrating how living organisms can be harnessed to create innovative and eco-friendly technologies. The research also opens up possibilities for robots that can grow, adapt, and repair themselves in ways that conventional robots cannot, potentially revolutionizing fields such as environmental monitoring, agriculture, and even healthcare.

There are four elements that actually run this bot, besides its shell:

The fungus's mycelium

The fungus's slug

UV light (from a UV array or the UV in sunlight)

Electricity (split into the fungus's own electrical pulses and the electrical signals from the sensors)

First, let's start with the fungus mycelium. Mycelia are the underground vegetative part of mushrooms, and they have a number of advantages. They can grow in harsh conditions. They can also sense chemical and biological signals and respond to multiple inputs. So basically, the mycelium is a neural system that relays commands between the root and the fungus itself.

Its slug is basically the fungus's cell system, or the actual biohybrid organism in this case.

Once the mycelium is exposed to UV light, it sends small electrical pulses to the slug system, and when the slug is stimulated by these pulses, it basically acts like a muscle: it moves or contracts.

And once you figure out how to shape its muscle system and house it carefully, you have what they call a "biohybrid robot".
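The post doesn't cover the signal processing, so here's a purely hypothetical sketch of the general idea. It is not the Cornell team's actual code. The idea: sample the mycelium's voltage, treat each upward crossing of a threshold as a spike, and turn every spike into a short "on" pulse for an actuator. The threshold, units, and pulse length are made-up values.

```python
from typing import List, Sequence


def detect_spikes(voltage_mv: Sequence[float], threshold_mv: float) -> List[int]:
    """Indices where the signal rises above the threshold (rising edges only)."""
    spikes: List[int] = []
    above = False
    for i, v in enumerate(voltage_mv):
        if v >= threshold_mv and not above:
            spikes.append(i)
        above = v >= threshold_mv
    return spikes


def spikes_to_actuator(spikes: Sequence[int], n_samples: int, pulse_len: int) -> List[int]:
    """1 = actuator on (the 'muscle' contracts), 0 = off, for pulse_len samples per spike."""
    command = [0] * n_samples
    for start in spikes:
        for i in range(start, min(start + pulse_len, n_samples)):
            command[i] = 1
    return command


# Hypothetical recording: two bursts of activity after UV exposure.
signal = [0.1, 0.2, 1.5, 1.7, 0.3, 0.2, 2.0, 0.1]
spikes = detect_spikes(signal, threshold_mv=1.0)
print(spikes)                                      # [2, 6]
print(spikes_to_actuator(spikes, len(signal), 2))  # [0, 0, 1, 1, 0, 0, 1, 1]
```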

The reason I'm talking about this now is that it reminded me of the "Fungus Baby Experiments", an insider name for a series of projects that have been running from shortly after COVID until now. Simply put, the goal was to create or adapt an organism to thrive in different environments, and to make those environments livable for humans in the future by manipulating artificial and external factors. Google it :)

Anyway, that's all from me this time.

Until next time...

Sources:

for fungus baby experiments:

#tech#tech news#daily news#cyberpunk#future tech#scifi tech#research#rnd#r&d#labs#neuroscience#neurotech#fungus#fungus experiments#biohybrid bot#synthetic bot#synthetic robot#Youtube


In a future conflict, American troops will direct the newest war machines not with sprawling control panels or sci-fi-inspired touchscreens, but controls familiar to anyone who grew up with an Xbox or PlayStation in their home.

Over the past several years, the US Defense Department has been gradually integrating what appear to be variants of the Freedom of Movement Control Unit (FMCU) handsets as the primary control units for a variety of advanced weapons systems, according to publicly available imagery published to the department’s Defense Visual Information Distribution System media hub.

Those systems include the new Navy Marine Corps Expeditionary Ship Interdiction System (NMESIS) launcher, a Joint Light Tactical Vehicle–based anti-ship missile system designed to fire the new Naval Strike Missile that’s essential to the Marine Corps’ plans for a notional future war with China in the Indo-Pacific; the Army’s new Maneuver-Short Range Air Defense (M-SHORAD) system that, bristling with FIM-92 Stinger and AGM-114 Hellfire missiles and a 30-mm chain gun mounted on a Stryker infantry fighting vehicle, is seen as a critical anti-air capability in a potential clash with Russia in Eastern Europe; the Air Force’s MRAP-based Recovery of Air Bases Denied by Ordnance (RADBO) truck that uses a laser to clear away improvised explosive devices and other unexploded munitions; and the Humvee-mounted High Energy Laser-Expeditionary (HELEX) laser weapon system currently undergoing testing by the Marine Corps.

The FMCU has also been employed on a variety of experimental unmanned vehicles, and according to a 2023 Navy contract, the system will be integral to the operation of the AN/SAY-3A Electro-Optic Sensor System (or “I-Stalker”) that’s designed to help the service’s future Constellation-class guided-missile frigates track and engage incoming threats.

Produced since 2008 by Measurement Systems Inc. (MSI), a subsidiary of British defense contractor Ultra that specializes in human-machine interfaces, the FMCU offers a similar form factor to the standard Xbox or PlayStation controller but with a ruggedized design intended to safeguard its sensitive electronics against whatever hostile environs American service members may find themselves in. A longtime developer of joysticks used on various US naval systems and aircraft, MSI has served as a subcontractor to major defense “primes” like General Atomics, Boeing, Lockheed Martin, and BAE Systems to provide the handheld control units for “various aircraft and vehicle programs,” according to information compiled by federal contracting software GovTribe.

“With the foresight to recognize the form factor that would be most accessible to today’s warfighters, [Ultra] has continued to make the FMCU one of the most highly configurable and powerful controllers available today,” according to Ultra. (The company did not respond to multiple requests for comment from WIRED.)

The endlessly customizable FMCU isn’t totally new technology: According to Ultra, the system has been in use since at least 2010 to operate the Navy’s now-sundowned MQ-8 Fire Scout unmanned autonomous helicopter and the Ground Based Operational Surveillance System (GBOSS) that the Army and Marine Corps have both employed throughout the global war on terror. But the recent proliferation of the handset across sophisticated new weapon platforms reflects a growing trend in the US military towards controls that aren’t just uniquely tactile or ergonomic in their operation, but inherently familiar to the next generation of potential warfighters before they ever even sign up to serve.

“For RADBO, the operators are generally a much younger audience,” an Air Force spokesman tells WIRED. “Therefore, utilizing a PlayStation or Xbox type of controller such as the FMCU seems to be a natural transition for the gaming generation.”

Indeed, that the US military is adopting specially built video-game-style controllers may appear unsurprising: The various service branches have long experimented with commercial off-the-shelf console handsets for operating novel systems. The Army and Marine Corps have for more than a decade used Xbox controllers to operate small unmanned vehicles, from ground units employed for explosive ordnance disposal to airborne drones, as well as larger assets like the M1075 Palletized Loading System logistics vehicle. Meanwhile, the “photonics mast” that has replaced the traditional periscope on the Navy’s new Virginia-class submarines uses the same inexpensive Xbox handset, as does the service’s Multifunctional Automated Repair System robot that’s employed on surface warships to address everything from in-theater battle damage repair to shipyard maintenance.

This trend is also prevalent among defense industry players angling for fresh Pentagon contracts: Look no further than the LOCUST Laser Weapon System developed by BlueHalo for use as the Army’s Palletized-High Energy Laser (P-HEL) system, which explicitly uses an Xbox controller to help soldiers target incoming drones and burn them out of the sky—not unlike the service’s previous ventures into laser weapons.

"By 2006, games like Halo were dominant in the military," Tom Phelps, then a product director at iRobot, told Business Insider in 2013 of the company’s adoption of a standard Xbox controller for its PackBot IED disposal robot. "So we worked with the military to socialize and standardize the concept … It was considered a very strong success, younger soldiers with a lot of gaming experience were able to adapt quickly."

Commercial video game handsets have also proven popular beyond the ranks of the US military, from the British Army’s remote-controlled Polaris MRZR all-terrain vehicle to Israel Aerospace Industries’ Carmel battle tank, the latter of which had its controls developed with feedback from teenage gamers who reportedly eschewed the traditional fighter jet-style joystick in favor of a standard video game handset. More recently, Ukrainian troops have used PlayStation controllers and Steam Decks to direct armed unmanned drones and machine gun turrets against invading Russian forces. And these controllers have unusual non-military applications as well: Most infamously, the OceanGate submarine that suffered a catastrophic implosion during a dive to the wreck of the Titanic in June 2023 was operated with a version of a Logitech F710 controller, as CBS News reported at the time.

“They are far more willing to experiment, they are much less afraid of technology … It comes to them naturally,” Israeli Defense Forces colonel Udi Tzur told The Washington Post in 2020 of optimizing the Carmel tank’s controls for younger operators. “It’s not exactly like playing Fortnite, but something like that, and amazingly they bring their skills to operational effectiveness in no time. I’ll tell you the truth, I didn’t think it could be reached so quickly.”

There are clear advantages to using cheap video-game-style controllers to operate advanced military weapons systems. The first is a matter of, well, control: Not only are video game handsets more ergonomic, but the configuration of buttons and joysticks offers tactile feedback not generally available from, say, one of the US military’s now-ubiquitous touchscreens. The Navy in particular learned this the hard way following the 2017 collision between the Arleigh Burke-class destroyer USS John S. McCain and an oil tanker off the coast of Singapore, an incident that prompted the service to swap out its bridge touchscreens for mechanical throttles across its guided-missile destroyer fleet after a National Transportation Safety Board report on the accident noted that sailors preferred the latter because “they provide[d] both immediate and tactile feedback to the operator.” Sure, a US service member may not operate an Xbox controller with a “rumble” feature, but the configuration of video-game-style controllers like the FMCU does offer significant tactile (and tactical) advantages over dynamic touchscreens, a conclusion several studies appear to reinforce.

But the real advantage of video-game-style controllers for the Pentagon is, as military officials and defense contractors have noted, their familiarity to the average US service member. As of 2024, more than 190.6 million Americans of all ages, or roughly 61 percent of the country, played video games, according to an annual report from the Entertainment Software Association trade group, while data from the Pew Research Center published in May indicates that 85 percent of American teenagers say they play video games, with 41 percent reporting that they play daily.

In terms of specific video game systems, the ESA report indicates that consoles and their distinctive controllers reign supreme among Gen Z and Gen Alpha, both demographic groups that stand to eventually end up fighting in America’s next big war. The Pentagon is, in the words of military technologist Peter W. Singer, “free-riding” off a video game industry that has spent decades training Americans on a familiar set of controls and ergonomics that, at least since the PlayStation introduced elongated grips in the 1990s, have been standard among most game systems for years (with apologies to the Wii remote that the Army eyed for bomb-disposal robots nearly two decades ago).

“The gaming companies spent millions of dollars developing an optimal, intuitive, easy-to-learn user interface, and then they went and spent years training up the user base for the US military on how to use that interface,” Singer said in a March 2023 interview. “These designs aren’t happenstance, and the same pool they’re pulling from for their customer base, the military is pulling from … and the training is basically already done.”

At the moment, it’s unclear exactly how many US military systems use the FMCU. When reached for comment, the Pentagon confirmed the use of the system on the NMESIS, M-SHORAD, and RADBO weapons platforms and referred WIRED to the individual service branches for additional details. The Marine Corps confirmed the handset’s use with the GBOSS, while the Air Force again confirmed the same for the RADBO. The Navy stated that the service does not currently use the FMCU with any existing systems; the Army did not respond to requests for comment.

How far the FMCU and its commercial off-the-shelf variants will spread throughout the ranks of the US military remains to be seen. But controls that effectively translate human inputs into machine movement tend to persist for decades after their introduction: After all, the joystick (or “control column,” in military parlance) has been a fixture of military aviation since its inception. Here’s just hoping that the Pentagon hasn’t moved on to the Power Glove by the time the next big war rolls around.


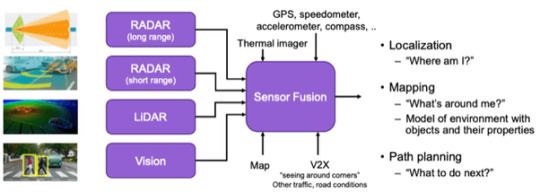

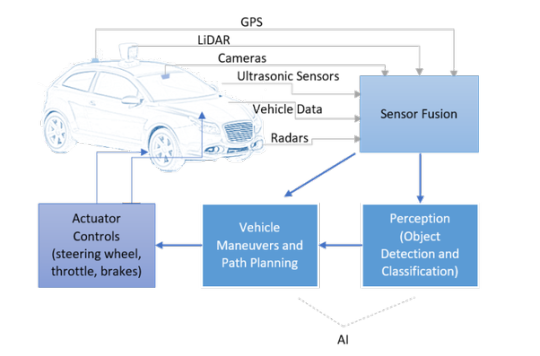

Sensor Fusion

Introduction to Sensor Fusion

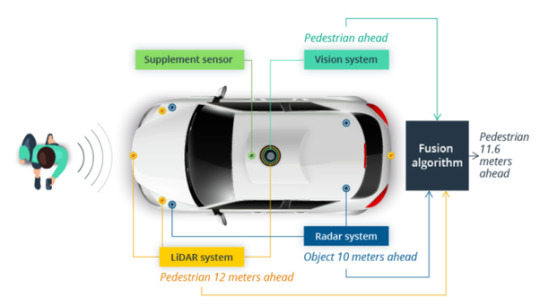

Autonomous vehicles require a number of sensors with varying parameters, ranges, and operating conditions. Cameras or vision-based sensors help in providing data for identifying certain objects on the road, but they are sensitive to weather changes.

Radar sensors perform very well in almost all types of weather but are unable to provide an accurate 3D map of the surroundings. LiDAR sensors map the surroundings of the vehicle to a high level of accuracy but are expensive.

The Need for Integrating Multiple Sensors

Thus, every sensor has a different role to play, but none of them can be relied on individually in an autonomous vehicle.

If an autonomous vehicle has to make decisions similar to the human brain (or in some cases, even better than the human brain), then it needs data from multiple sources to improve accuracy and get a better understanding of the overall surroundings of the vehicle.

This is why sensor fusion becomes an essential component.

Sensor Fusion | Dorleco

Source: The Functional Components of Autonomous Vehicles

Sensor Fusion

Sensor fusion essentially means combining the data from all the sensors mounted around the vehicle’s body and using the combined result to make decisions.

Its main benefit is reducing the uncertainty that comes with relying on any individual sensor.

Thus, sensor fusion compensates for the drawbacks of each sensor and helps build a robust sensing system.

Most of the time, in normal driving scenarios, sensor fusion brings a lot of redundancy to the system. This means that there are multiple sensors detecting the same objects.


Source: https://semiengineering.com/sensor-fusion-challenges-in-cars/

However, when one or more sensors fail to perform accurately, sensor fusion helps ensure that no objects go undetected. For example, a camera can capture the visuals around a vehicle in ideal weather conditions.

But during dense fog or heavy rainfall, the camera won’t provide sufficient data to the system. This is where radar, and to some extent, LiDAR sensors help.

Furthermore, a radar sensor may accurately show that there is a truck in the intersection where the car is waiting at a red light.

But it may not be able to provide a detailed three-dimensional picture of the truck. This is where LiDAR is needed.

Thus, having multiple sensors detect the same object may seem unnecessary in ideal scenarios, but in edge cases such as poor weather, sensor fusion is required.

Levels of Sensor Fusion

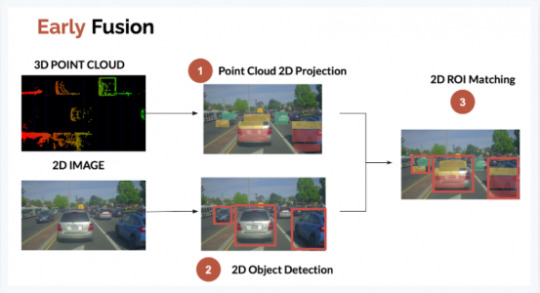

1. Low-Level Fusion (Early Fusion):

In this kind of sensor fusion, the raw data coming from all sensors is fused in a single computing unit before any processing begins. For example, pixels from cameras and point clouds from LiDAR sensors are fused to understand the size and shape of a detected object.

Because all of the raw data reaches the computing unit, different algorithms can use different aspects of it, which gives this method many potential applications.

However, transferring and handling such huge amounts of data makes the computation complex. High-performance processing units are required, which drives up the price of the hardware setup.

2. Mid-Level Fusion:

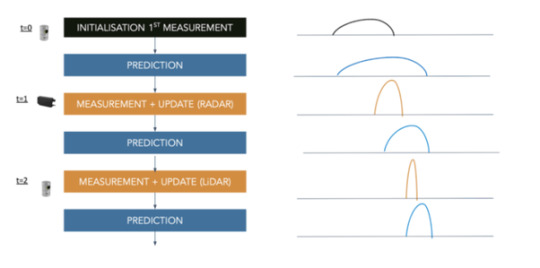

In mid-level fusion, objects are first detected by the individual sensors, and then the algorithm fuses the detections. Generally, a Kalman filter is used to fuse this data (explained in more detail later in this post).

The idea is to have, say, a camera and a LiDAR sensor detect an obstacle individually, and then fuse the results from both to get the best estimates of the obstacle’s position, class, and velocity.

This is easier to implement, but the fusion process may fail if one of the sensors fails.
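As a rough illustration of fusing object-level detections, the sketch below combines a camera’s and a LiDAR’s independent estimates of the same obstacle’s position by weighting each by the inverse of its variance. For a single scalar this is equivalent to one Kalman measurement update. The `Detection` type, the function name, and the variance values are hypothetical, chosen only to show the idea.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One sensor's estimate of an obstacle's position along one axis (metres)."""
    position: float
    variance: float  # measurement uncertainty (sigma^2); smaller means more trusted


def fuse_detections(a: Detection, b: Detection) -> Detection:
    """Fuse two independent estimates of the same quantity by inverse-variance weighting.

    The fused position leans toward the less uncertain sensor, and the fused
    variance is smaller than either input variance.
    """
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    fused_position = (w_a * a.position + w_b * b.position) / (w_a + w_b)
    fused_variance = 1.0 / (w_a + w_b)
    return Detection(fused_position, fused_variance)


if __name__ == "__main__":
    # Illustrative numbers only: the camera is assumed noisier in range than the LiDAR.
    camera = Detection(position=20.8, variance=0.64)
    lidar = Detection(position=20.1, variance=0.04)
    fused = fuse_detections(camera, lidar)
    print(f"fused position = {fused.position:.2f} m, variance = {fused.variance:.3f}")
```

A full mid-level pipeline would also fuse class labels and velocities and would first need to associate detections with each other. This sketch only shows the numeric core of combining two position estimates.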

3. High-Level Fusion (Late Fusion):

This is similar to the mid-level method, except that detection and tracking algorithms are run for each individual sensor, and the resulting tracks are then fused.

The problem, however, is that if the tracking for one sensor contains errors, the entire fusion may be affected.


Source: Autonomous Vehicles: The Data Problem

Sensor fusion can also be of different types. Competitive sensor fusion has multiple types of sensors generating data about the same object to ensure consistency.

Complementary sensor fusion uses two sensors to paint a more complete picture, something that neither sensor could manage individually.

Coordinated sensor fusion improves the quality of the data, for example, by combining two different 2D perspectives of an object to generate a 3D view of it.
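Stereo vision is a familiar example of the coordinated case. Two cameras a known distance apart (the baseline) see the same point at slightly different horizontal pixel positions. That shift, the disparity, gives depth via Z = f·B/d. This minimal sketch assumes rectified pinhole cameras with square pixels. The focal length, baseline, and principal point in the example are illustrative values, not figures from the source.

```python
def stereo_depth(x_left_px: float, x_right_px: float,
                 focal_length_px: float, baseline_m: float) -> float:
    """Depth (m) of a point from its horizontal pixel positions in a rectified stereo pair."""
    disparity = x_left_px - x_right_px  # shift of the point between the two views
    if disparity <= 0:
        raise ValueError("a point in front of the cameras must have positive disparity")
    return focal_length_px * baseline_m / disparity


def triangulate(x_left_px: float, x_right_px: float, y_px: float,
                focal_length_px: float, baseline_m: float,
                cx: float, cy: float) -> tuple:
    """3D point (X right, Y down, Z forward) in the left camera's frame, in metres."""
    z = stereo_depth(x_left_px, x_right_px, focal_length_px, baseline_m)
    x = (x_left_px - cx) * z / focal_length_px
    y = (y_px - cy) * z / focal_length_px  # assumes square pixels (fx == fy)
    return (x, y, z)


if __name__ == "__main__":
    # Illustrative camera: 700 px focal length, 12 cm baseline, principal point (320, 240).
    point = triangulate(420.0, 400.0, 240.0, focal_length_px=700.0,
                        baseline_m=0.12, cx=320.0, cy=240.0)
    print("X, Y, Z =", tuple(round(c, 3) for c in point))
```

Neither 2D image alone contains depth. Only the pairing of the two views recovers the third dimension, which is what makes this fusion "coordinated" rather than merely redundant.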

Variations in the Sensor Fusion Approach

Radar-LiDAR Fusion

Since sensors work in different ways, there is no single solution to sensor fusion. If LiDAR and radar data have to be fused, the mid-level sensor fusion approach can be used: the objects detected by each sensor are fused, and decisions are then made on the fused result.


Source: The Way of Data: How Sensor Fusion and Data Compression Empower Autonomous Driving - Intellias

In this approach, a Kalman filter can be used. It follows a "predict-and-update" cycle. First, a motion model predicts the object’s next state from the previous estimate. Then the new measurement corrects that prediction, with the prediction and the measurement each weighted by its uncertainty. The corrected estimate becomes the starting point for the next prediction. The following image illustrates this cycle.


Source: Sensor Fusion

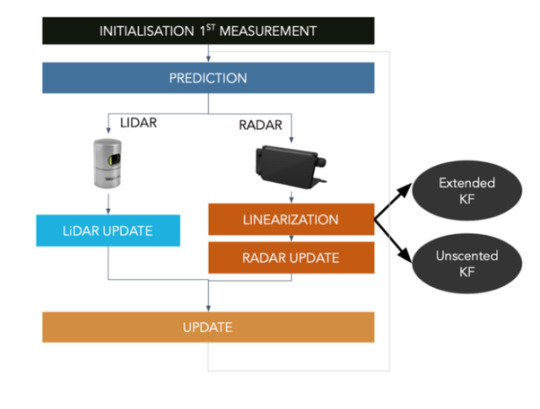
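To make the predict-and-update cycle concrete, here is a minimal sketch. It assumes a single axis, a constant-velocity motion model, and position-only measurements such as a LiDAR range to an object. The class name, the noise values, and the large initial covariance (meaning "no idea yet") are illustrative assumptions, not values from the source.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class KalmanFilter1D:
    """Constant-velocity Kalman filter for one axis: state is position p and velocity v.

    Only position is measured (H = [1, 0]), as when a LiDAR reports where an object is.
    The noise values are illustrative assumptions, not tuned parameters.
    """
    p: float = 0.0                      # position estimate (m)
    v: float = 0.0                      # velocity estimate (m/s)
    P: List[List[float]] = field(default_factory=lambda: [[1000.0, 0.0], [0.0, 1000.0]])
    accel_noise: float = 1.0            # variance of unmodelled acceleration (process noise)
    meas_noise: float = 0.05            # measurement noise variance R (m^2)

    def predict(self, dt: float) -> None:
        """Project the state forward with x' = F x, where F = [[1, dt], [0, 1]]."""
        self.p += self.v * dt
        (a, b), (c, d) = self.P
        q = self.accel_noise
        # P' = F P F^T + Q, with Q from a white-noise-acceleration model.
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**4 / 4, b + dt * d + q * dt**3 / 2],
            [c + dt * d + q * dt**3 / 2, d + q * dt**2],
        ]

    def update(self, z: float) -> None:
        """Correct the prediction with a position measurement z."""
        (a, b), (c, d) = self.P
        innovation = z - self.p             # y = z - H x
        s = a + self.meas_noise             # S = H P H^T + R
        k_p, k_v = a / s, c / s             # K = P H^T / S
        self.p += k_p * innovation
        self.v += k_v * innovation
        # P = (I - K H) P
        self.P = [
            [(1 - k_p) * a, (1 - k_p) * b],
            [c - k_v * a, d - k_v * b],
        ]


if __name__ == "__main__":
    kf = KalmanFilter1D()
    dt = 0.1
    for step in range(1, 51):
        true_position = 10.0 + 2.0 * step * dt   # object moving at 2 m/s
        noise = 0.1 if step % 2 else -0.1        # deterministic stand-in for sensor noise
        kf.predict(dt)
        kf.update(true_position + noise)
    print(f"position ~ {kf.p:.2f} m, velocity ~ {kf.v:.2f} m/s")
```

The filter never measures velocity directly. It infers velocity from how successive position measurements disagree with its predictions, which is the "update makes the next prediction better" loop described above.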

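The issue with radar-LiDAR sensor fusion is that the radar sensor measures in polar coordinates (range, bearing, and range rate), which relate non-linearly to the object’s Cartesian position and velocity. LiDAR, by contrast, provides Cartesian positions, which relate linearly to that state. Hence, the radar measurement model has to be handled differently before its data can be fused with the LiDAR data and the model updated accordingly.

Two filters handle the radar’s non-linearity. An extended Kalman filter (EKF) linearizes the measurement function around the current estimate. An unscented Kalman filter (UKF) instead passes a set of sampled sigma points through the non-linear function.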


Source: Sensor Fusion
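For the radar side, an EKF needs the radar measurement function h(x) and its Jacobian. The sketch below assumes a common textbook setup that the post itself does not specify: a 2D state [px, py, vx, vy] and a radar that reports range, bearing, and range rate. In the EKF update, the Jacobian stands in for the linear H matrix. The bearing part of the residual z − h(x) should also be wrapped to [−π, π). All function names are hypothetical.

```python
import math
from typing import List, Sequence


def radar_measurement(state: Sequence[float]) -> List[float]:
    """h(x): map state [px, py, vx, vy] to radar [range, bearing, range_rate]."""
    px, py, vx, vy = state
    rho = math.hypot(px, py)
    if rho < 1e-9:
        raise ValueError("range is undefined for an object at the sensor origin")
    phi = math.atan2(py, px)
    rho_dot = (px * vx + py * vy) / rho
    return [rho, phi, rho_dot]


def radar_jacobian(state: Sequence[float]) -> List[List[float]]:
    """Jacobian dh/dx evaluated at the current state estimate (3 x 4)."""
    px, py, vx, vy = state
    rho2 = px * px + py * py
    if rho2 < 1e-9:
        raise ValueError("Jacobian is undefined for an object at the sensor origin")
    rho = math.sqrt(rho2)
    rho3 = rho2 * rho
    return [
        [px / rho, py / rho, 0.0, 0.0],
        [-py / rho2, px / rho2, 0.0, 0.0],
        [py * (vx * py - vy * px) / rho3, px * (vy * px - vx * py) / rho3, px / rho, py / rho],
    ]


def wrap_angle(angle: float) -> float:
    """Wrap an angle to [-pi, pi), needed for the bearing component of z - h(x)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


if __name__ == "__main__":
    x = [3.0, 4.0, 1.0, 2.0]
    print("h(x) =", radar_measurement(x))
    print("H_j  =", radar_jacobian(x))
```

A UKF would skip the Jacobian entirely and call `radar_measurement` on each sigma point instead.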

Camera-LiDAR Fusion

Now, if a system needs to fuse camera and LiDAR data, either low-level fusion (fusing raw data) or high-level fusion (fusing objects and their positions) can be used. The results in the two cases vary slightly.

The low-level fusion consists of overlapping the data from both sensors and using the ROI (region of interest) matching approach.

The 3D point cloud from the LiDAR sensor is projected onto the 2D image plane, while the images captured by the camera are used to detect the objects in front of the vehicle.

These two maps are then superimposed to check the common regions. These common regions signify the same object detected by two different sensors.
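The projection-and-overlap step can be sketched with a simple pinhole camera model. This sketch assumes the LiDAR points have already been transformed into the camera’s coordinate frame; that extrinsic calibration step is not shown. The intrinsics fx, fy, cx, cy and the function names are hypothetical. Taking the median depth of the points that fall inside a camera bounding box is one simple way to attach a distance to a camera detection, not necessarily the method used in the source.

```python
import statistics
from typing import Iterable, List, Optional, Tuple

Point3D = Tuple[float, float, float]       # camera frame: x right, y down, z forward (m)
Box2D = Tuple[float, float, float, float]  # (u_min, v_min, u_max, v_max) in pixels


def project_points(points_cam: Iterable[Point3D], fx: float, fy: float,
                   cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Pinhole projection of LiDAR points (already in the camera frame) to (u, v, depth)."""
    projected = []
    for x, y, z in points_cam:
        if z <= 0:
            continue  # behind the camera, so it cannot appear in the image
        projected.append((fx * x / z + cx, fy * y / z + cy, z))
    return projected


def points_in_box(projected: Iterable[Tuple[float, float, float]],
                  box: Box2D) -> List[Tuple[float, float, float]]:
    """Keep the projected points that fall inside a camera bounding box (the ROI)."""
    u_min, v_min, u_max, v_max = box
    return [p for p in projected if u_min <= p[0] <= u_max and v_min <= p[1] <= v_max]


def estimate_box_depth(projected: Iterable[Tuple[float, float, float]],
                       box: Box2D) -> Optional[float]:
    """Median LiDAR depth inside the ROI, or None if no points land in it."""
    inside = points_in_box(projected, box)
    if not inside:
        return None
    return statistics.median([p[2] for p in inside])


if __name__ == "__main__":
    cloud = [(0.5, 0.0, 10.0), (0.6, 0.1, 10.2), (-5.0, 0.0, 8.0), (0.0, 0.0, -3.0)]
    pixels = project_points(cloud, fx=700.0, fy=700.0, cx=640.0, cy=360.0)
    camera_box = (650.0, 330.0, 720.0, 400.0)  # hypothetical detection from the camera
    print("depth of detected object:", estimate_box_depth(pixels, camera_box))
```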

In high-level fusion, the data from each sensor is processed first: the camera’s 2D detections are converted into 3D object detections.

These are then compared with the 3D object detections from the LiDAR sensor. The overlap between the two, measured as intersection over union (IoU), determines which detections match (IoU matching).


Source: LiDAR and Camera Sensor Fusion in Self-Driving Cars
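IoU matching can be sketched in a few lines. To keep the sketch short, it assumes axis-aligned 3D boxes; real detectors usually output boxes rotated by a yaw angle, which makes the intersection harder to compute. It also assumes a greedy one-to-one assignment and an arbitrary 0.5 threshold. All names and numbers are illustrative.

```python
from typing import List, Sequence, Tuple

# Axis-aligned 3D box: (x_min, y_min, z_min, x_max, y_max, z_max) in metres.
Box3D = Tuple[float, float, float, float, float, float]


def box_volume(box: Box3D) -> float:
    return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])


def iou_3d(a: Box3D, b: Box3D) -> float:
    """Intersection over union of two axis-aligned 3D boxes (0.0 if they do not overlap)."""
    intersection = 1.0
    for axis in range(3):
        overlap = min(a[axis + 3], b[axis + 3]) - max(a[axis], b[axis])
        if overlap <= 0:
            return 0.0
        intersection *= overlap
    union = box_volume(a) + box_volume(b) - intersection
    return intersection / union


def match_boxes(camera_boxes: Sequence[Box3D], lidar_boxes: Sequence[Box3D],
                iou_threshold: float = 0.5) -> List[Tuple[int, int, float]]:
    """Greedy one-to-one matching: accept the highest-IoU pairs first.

    Returns (camera_index, lidar_index, iou) for each matched pair.
    """
    candidates = []
    for i, cam_box in enumerate(camera_boxes):
        for j, lidar_box in enumerate(lidar_boxes):
            score = iou_3d(cam_box, lidar_box)
            if score >= iou_threshold:
                candidates.append((score, i, j))
    candidates.sort(reverse=True)

    used_camera, used_lidar, matches = set(), set(), []
    for score, i, j in candidates:
        if i in used_camera or j in used_lidar:
            continue  # each detection may be matched at most once
        used_camera.add(i)
        used_lidar.add(j)
        matches.append((i, j, score))
    return matches


if __name__ == "__main__":
    camera = [(0.0, 0.0, 0.0, 4.0, 2.0, 1.5), (10.0, 0.0, 0.0, 14.0, 2.0, 1.5)]
    lidar = [(10.2, 0.0, 0.0, 14.2, 2.0, 1.5), (0.1, 0.0, 0.0, 4.1, 2.0, 1.5)]
    for cam_idx, lidar_idx, score in match_boxes(camera, lidar):
        print(f"camera box {cam_idx} <-> lidar box {lidar_idx} (IoU {score:.2f})")
```

Detections left unmatched on either side are exactly the cases fusion is meant to surface: an object one sensor saw and the other missed.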

Thus, the combination of sensors has a bearing on which approach of sensor fusion needs to be used. Sensors play a massive role in providing the computer with an adequate amount of data to make the right decisions.

Furthermore, sensor fusion also allows the computer to “have a second look” at the data and filter out the noise while improving accuracy.

Autonomous Vehicle Feature Development at Dorle Controls

At Dorle Controls, developing sensor and actuator drivers is one of the many things we do. Be it bespoke feature development or full-stack software development, we can assist you.

For more info on our capabilities and how we can assist you with your software control needs, please write to [email protected].


SERVO DISTANCE INDICATOR USING ARDUINO UNO

INTRODUCTION

Distance measurement is a fundamental concept in various fields, including robotics, automation, and security systems. One common and efficient way to measure distance is ultrasonic sensing, which works by emitting sound waves and calculating the time it takes for the waves to reflect back from an object, allowing accurate measurement of distance without physical contact.

In this project, we will use an HC-SR04 Ultrasonic Sensor in conjunction with an Arduino microcontroller to measure the distance between the sensor and an object. The sensor emits ultrasonic waves and measures the time it takes for the waves to return after reflecting off the object. By using the speed of sound and the time measured, the distance is calculated. This simple yet powerful setup can be applied in a variety of real-world applications such as obstacle detection in robots, parking assistance systems, and automatic door operations.
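The distance calculation itself is one line of arithmetic. The sketch below writes it in Python for readability; on the board, the same logic would live in the Arduino C++ sketch. It uses the 0.0343 cm/µs speed of sound given in this project and halves the echo time, because the pulse travels to the object and back. The function name is hypothetical.

```python
from typing import Optional

SPEED_OF_SOUND_CM_PER_US = 0.0343  # speed of sound used in this project (about 20 °C air)


def echo_to_distance_cm(echo_duration_us: float) -> Optional[float]:
    """Convert an HC-SR04 echo pulse width (microseconds) into a distance in cm.

    The pulse width is the round-trip time, so it is halved. A duration of 0 is
    treated as "no echo", mirroring Arduino's pulseIn(), which returns 0 on timeout.
    """
    if echo_duration_us <= 0:
        return None
    return echo_duration_us * SPEED_OF_SOUND_CM_PER_US / 2


if __name__ == "__main__":
    for duration in (583.0, 1000.0, 0.0):
        print(duration, "us ->", echo_to_distance_cm(duration), "cm")
```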

WORKING PRINCIPLE

1. Servo Movement: The servo motor rotates to different angles (0° to 180°). The ultrasonic sensor is mounted on top of the servo and moves with it.

2. Distance Measurement: At each position, the ultrasonic sensor sends out an ultrasonic pulse and waits for the echo to return after hitting an object. The Arduino records the time taken for the echo to return.

3. Distance Calculation: The Arduino calculates the distance to the object from the recorded round-trip time and the speed of sound (0.0343 cm/µs), halving the result because the pulse travels to the object and back.

4. Servo as Indicator: The servo motor's position provides a physical indication of the direction of the detected object. As the servo moves across a range of angles, the system can map out objects in different directions based on distance.

5. Visual Output: The Arduino can also send the distance and angle data to the serial monitor, creating a real-time visual representation of the detected object positions.
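To turn a sweep into a map, each (angle, distance) pair can be converted from polar to Cartesian coordinates, with the sensor at the origin. The sketch assumes, hypothetically, that the Arduino prints one `angle,distance` line per servo position over serial. The 200 cm cutoff is an arbitrary illustrative limit, not an HC-SR04 specification.

```python
import math
from typing import Iterable, List, Tuple


def polar_to_xy(angle_deg: float, distance_cm: float) -> Tuple[float, float]:
    """Servo angle + measured distance -> (x, y) in cm, with the sensor at the origin.

    0 degrees points along +x and 90 degrees along +y (straight ahead of the servo).
    """
    theta = math.radians(angle_deg)
    return distance_cm * math.cos(theta), distance_cm * math.sin(theta)


def parse_reading(line: str) -> Tuple[float, float]:
    """Parse one serial line in an assumed "angle,distance" format, e.g. "90,25.4"."""
    angle_text, distance_text = line.strip().split(",")
    return float(angle_text), float(distance_text)


def sweep_to_points(lines: Iterable[str],
                    max_range_cm: float = 200.0) -> List[Tuple[float, float]]:
    """Turn a sweep of serial readings into plottable points, skipping invalid ranges."""
    points = []
    for line in lines:
        angle, distance = parse_reading(line)
        if 0.0 < distance <= max_range_cm:  # 0 means "no echo"; far values are discarded
            points.append(polar_to_xy(angle, distance))
    return points


if __name__ == "__main__":
    readings = ["0,35.2", "45,30.0", "90,12.5", "135,0", "180,48.9"]
    for x, y in sweep_to_points(readings):
        print(f"object at x = {x:6.1f} cm, y = {y:6.1f} cm")
```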

APPLICATIONS

1. Autonomous Robots and Vehicles

2. Radar Systems

3. Parking Assistance

4. Security Systems

5. Environmental Scanning in Drones

6. Warehouse Management and Automation

7. Industrial Automation

8. Robotic Arm Guidance

9. Collision Avoidance in UAVs/Robots

10. Interactive Displays or Art Installations

11. Smart Doors and Gates

CONCLUSION

The Servo Distance Indicator Project successfully demonstrates the integration of an ultrasonic sensor and a servo motor to create an effective distance measurement system. By measuring the distance to an object, the project provides real-time feedback through the movement of a servo motor, which indicates the measured distance via a visual representation.


What is artificial intelligence (AI)?

Imagine asking Siri about the weather, receiving a personalized Netflix recommendation, or unlocking your phone with facial recognition. These everyday conveniences are powered by Artificial Intelligence (AI), a transformative technology reshaping our world. This post delves into AI, exploring its definition, history, mechanisms, applications, ethical dilemmas, and future potential.

What is Artificial Intelligence?

Definition: AI refers to machines or software designed to mimic human intelligence, performing tasks like learning, problem-solving, and decision-making. Unlike basic automation, AI adapts and improves through experience.

Brief History:

1950: Alan Turing proposes the Turing Test, questioning if machines can think.

1956: The Dartmouth Conference coins the term "Artificial Intelligence," sparking early optimism.

1970s–80s: Unmet expectations lead to "AI winters," followed by a resurgence in the 2000s driven by advances in computing and data availability.

21st Century: Breakthroughs in machine learning and neural networks drive AI into mainstream use.

How Does AI Work?

AI systems process vast data to identify patterns and make decisions. Key components include:

Machine Learning (ML): A subset where algorithms learn from data.

Supervised Learning: Uses labeled data (e.g., spam detection).

Unsupervised Learning: Finds patterns in unlabeled data (e.g., customer segmentation).

Reinforcement Learning: Learns via trial and error (e.g., AlphaGo).

Neural Networks & Deep Learning: Inspired by the human brain, these layered algorithms excel in tasks like image recognition.

Big Data & GPUs: Massive datasets and powerful processors enable training complex models.
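Supervised learning can be shown at its smallest scale: fitting a straight line to labelled examples by gradient descent. This is a minimal sketch of the general idea, not how production systems such as spam filters are built. The toy data, learning rate, and epoch count are illustrative assumptions.

```python
from typing import Sequence, Tuple


def train_linear_model(xs: Sequence[float], ys: Sequence[float],
                       learning_rate: float = 0.05,
                       epochs: int = 2000) -> Tuple[float, float]:
    """Fit y ~ w * x + b to labelled examples by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors on the labelled training data.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    # Toy labelled data generated from y = 2x + 1 (illustrative only).
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]
    w, b = train_linear_model(xs, ys)
    print(f"learned w = {w:.3f}, b = {b:.3f}; prediction for x = 10: {w * 10 + b:.2f}")
```

Neural networks follow the same loop: compute predictions, measure the error against the labels, and adjust parameters along the gradient. They simply have far more parameters and layers.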

Types of AI

Narrow AI: Specialized in one task (e.g., Alexa, chess engines).

General AI: Hypothetical, human-like adaptability (not yet realized).

Superintelligence: A speculative future AI surpassing human intellect.

Other Classifications:

Reactive Machines: Respond to inputs without memory (e.g., IBM’s Deep Blue).

Limited Memory: Uses past data (e.g., self-driving cars).

Theory of Mind: Understands emotions (in research).

Self-Aware: Conscious AI (purely theoretical).

Applications of AI

Healthcare: Diagnosing diseases via imaging, accelerating drug discovery.

Finance: Detecting fraud, algorithmic trading, and robo-advisors.

Retail: Personalized recommendations, inventory management.

Manufacturing: Predictive maintenance using IoT sensors.

Entertainment: AI-generated music, art, and deepfake technology.

Autonomous Systems: Self-driving cars (Tesla, Waymo), delivery drones.

Ethical Considerations

Bias & Fairness: Biased training data can lead to discriminatory outcomes (e.g., higher facial recognition error rates for people with darker skin tones).

Privacy: Concerns over data collection by smart devices and surveillance systems.

Job Displacement: Automation risks certain roles but may create new industries.

Accountability: Determining liability for AI errors (e.g., autonomous vehicle accidents).

The Future of AI

Integration: Smarter personal assistants, seamless human-AI collaboration.

Advancements: Improved natural language processing (e.g., ChatGPT), climate change solutions (optimizing energy grids).

Regulation: Growing need for ethical guidelines and governance frameworks.

Conclusion

AI holds immense potential to revolutionize industries, enhance efficiency, and solve global challenges. However, balancing innovation with ethical stewardship is crucial. By fostering responsible development, society can harness AI’s benefits while mitigating risks.


Chemists develop highly reflective black paint to make objects more visible to autonomous cars

Driving at night might be a scary challenge for a new driver, but with hours of practice it soon becomes second nature. For self-driving cars, however, practice may not be enough because the lidar sensors that often act as these vehicles' "eyes" have difficulty detecting dark-colored objects. New research published in ACS Applied Materials & Interfaces describes a highly reflective black paint that could help these cars see dark objects and make autonomous driving safer. Lidar, short for light detection and ranging, is a system used in a variety of applications, including geologic mapping and self-driving vehicles. The system works like echolocation, but instead of emitting sound waves, lidar emits tiny pulses of near-infrared light. The light pulses bounce off objects and back to the sensor, allowing the system to map the 3D environment it's in.
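The echolocation analogy maps onto simple time-of-flight arithmetic. Light travels to the object and back, so range = c × t / 2. Each return, together with the beam’s pointing angles, becomes one point in the 3D map. This sketch assumes an x-forward, y-left, z-up coordinate convention and uses hypothetical function names.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def round_trip_to_range_m(round_trip_time_s: float) -> float:
    """Range to the reflecting surface: the pulse travels out and back, so halve it."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2


def return_to_point(range_m: float, azimuth_deg: float,
                    elevation_deg: float) -> Tuple[float, float, float]:
    """Convert one lidar return (range + beam angles) into an (x, y, z) point.

    Assumed convention: x forward, y left, z up; azimuth measured from x toward y,
    elevation measured up from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


if __name__ == "__main__":
    r = round_trip_to_range_m(200e-9)  # a 200 ns round trip
    print(f"range = {r:.2f} m, point = {return_to_point(r, 10.0, -2.0)}")
```

A dark object returns too little of the near-infrared pulse for the sensor to time it reliably, so no point is produced at all. That is the detection gap the reflective paint targets.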


Mobility solutions

AAVI learns how shared intelligent transportation has the potential to enrich communities and solve driver shortages, with autonomous bus and shuttle services in Europe and the US providing valuable lessons.

Surprisingly few people are aware that automated public transportation in the form of autonomous buses and shuttles is already a reality, with fleets of driverless vehicles in daily service in both the US and Europe. Adastec Corp is currently operating a full-size (22-seat), electric SAE Level 4-capable automated transit bus on a circular route around Michigan State University’s campus in East Lansing, Michigan. “We have been making 10 daily trips between an outer commuter lot and the auditorium building within the campus, carrying students and faculty for more than one year,” explains Dr Kerem Par, CTO and co-founder of Adastec Corp.

Continue reading Mobility solutions at ADAS & Autonomous Vehicle International. https://www.autonomousvehicleinternational.com/features/mobility-solutions-2.html


What Is Generative Physical AI, and Why Is It Important?

What is Physical AI?

Physical artificial intelligence enables autonomous machines, such as robots, to perceive, understand, and carry out complex tasks in the real (physical) world. Because it can generate ideas and actions to carry out, it is also sometimes referred to as “generative physical AI.”

How Does Physical AI Work?

Generative AI models such as the large language models GPT and Llama are trained on massive volumes of text and image data, mostly from the Internet. Although these AIs are very good at generating human language and abstract ideas, their understanding of the physical world and its laws is still limited.

Generative physical AI extends current generative AI with an understanding of the spatial relationships and physical behavior of the three-dimensional world we all inhabit. This is accomplished by supplying extra data during AI training that describes the spatial relationships and physical laws of the real world.

This 3D training data is created with highly realistic computer simulations, which serve as both a data source and an AI training ground.

Physically based data generation starts with a digital twin of a location, such as a factory. Sensors and autonomous machines, such as robots, are introduced into this virtual environment. Simulations that replicate real-world situations are then run, and the sensors record different interactions, such as rigid-body dynamics (movement and collisions) or how light interacts in an environment.

What Role Does Reinforcement Learning Play in Physical AI?

Reinforcement learning teaches autonomous robots skills in a simulated environment so that they can perform in the real world. Through hundreds or even millions of trial-and-error attempts, it enables autonomous robots to acquire abilities safely and efficiently.

By rewarding a physical AI model for taking desirable actions in the simulation, this learning approach helps the model continually adapt and improve. Through repeated reinforcement learning, autonomous robots gradually learn to respond correctly to novel circumstances and unanticipated obstacles, preparing them for real-world operation.

Over time, an autonomous machine can acquire the complex fine motor skills required for practical tasks such as packing boxes neatly, helping to assemble vehicles, or navigating environments independently.
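The reward-driven trial-and-error loop described above can be shown at toy scale with tabular Q-learning, one classic reinforcement learning algorithm. This is a minimal sketch and not the method any particular robotics platform uses. A "robot" in a six-cell corridor learns, purely from rewards in simulation, that moving right reaches the goal. The environment, the rewards, and the hyperparameters are all hypothetical.

```python
import random
from typing import List, Tuple

N_STATES = 6          # toy corridor: positions 0..5, position 5 is the goal
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Simulated environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True      # reward for reaching the goal
    return next_state, -0.01, False       # small cost per step encourages short paths


def q_learning(episodes: int = 500, alpha: float = 0.1, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))                          # explore
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])  # exploit
            next_state, reward, done = step(state, ACTIONS[a])
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])  # move estimate toward target
            state = next_state
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best action index for each non-goal state after training."""
    return [max(range(len(ACTIONS)), key=lambda i: q[s][i]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    policy = greedy_policy(q_learning())
    print(["right" if a == 1 else "left" for a in policy])
```

Real physical AI training replaces the corridor with a physics simulator and the table with a neural network, but the reward, explore, and update loop is the same idea.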

Why is Physical AI Important?

Previously, autonomous robots were unable to perceive and make sense of their surroundings. Generative physical AI, however, enables the construction and training of robots that can naturally interact with and adapt to their real-world environment.

Developing physical AI requires robust, physics-based simulations that provide a safe, controlled setting for training autonomous machines. This increases the efficiency and accuracy of robots in carrying out complex tasks and facilitates more natural interactions between people and machines, improving accessibility and usefulness in real-world applications.

Generative physical AI opens up new possibilities that can transform businesses across industries. For instance:

Robots: With physical AI, robots show notable improvements in their operating skills in a range of environments.

Using direct input from onboard sensors, autonomous mobile robots (AMRs) in warehouses can navigate complex environments and avoid obstacles, including people.

Robotic manipulators can adjust their grasp position and force depending on how an item is positioned on a conveyor belt, demonstrating both fine and gross motor skills suited to the object type.

This approach also helps surgical robots learn complex tasks such as threading needles and stitching, demonstrating the precision and versatility of generative physical AI in training robots for specialized tasks.

Autonomous Vehicles (AVs): Using sensors to perceive and understand their environment, AVs can make sound decisions in a variety of settings, from open highways to dense urban areas. Training AVs with physical AI can help them better detect pedestrians, respond to traffic or weather conditions, and change lanes autonomously, adapting effectively to a wide range of unforeseen situations.

Smart Spaces: Physical AI is making large indoor spaces such as factories and warehouses, where daily operations involve a constant flow of people, vehicles, and robots, safer and more functional. Using fixed cameras and advanced computer vision models to track multiple objects and activities within these spaces, teams can improve dynamic route planning and optimize operational efficiency. These systems can also perceive and understand large-scale, complex environments effectively while putting human safety first.

How Can You Get Started With Physical AI?

Using Generative physical AI to create the next generation of autonomous devices requires a coordinated effort from many specialized computers:

Construct a virtual 3D environment: A high-fidelity, physically based virtual environment is needed to reflect the real world and provide the synthetic data essential for training physical AI. To create these 3D worlds, developers can integrate RTX rendering and Universal Scene Description (OpenUSD) into their existing software tools and simulation workflows using the NVIDIA Omniverse platform of APIs, SDKs, and services.

NVIDIA OVX systems support this environment: Large-scale scenes or data required for simulation or model training are also captured at this stage. fVDB, an extension of PyTorch that enables deep learning operations on large-scale 3D data, is a significant technical advancement that enables efficient AI model training and inference on rich 3D datasets by representing their features efficiently.

Create synthetic data: Custom synthetic data generation (SDG) pipelines can be built using the Omniverse Replicator SDK. One of Replicator’s built-in features is domain randomization, which lets you vary many physical aspects of a 3D simulation, including lighting, position, size, texture, materials, and much more. The resulting images can also be further enhanced using diffusion models with ControlNet.
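The core idea of domain randomization is to sample scene parameters from ranges, so that a model trained on the rendered images does not overfit to any one lighting setup, pose, or texture. The sketch below is a generic illustration in plain Python. It does not use or imitate the Omniverse Replicator API, and the parameter names, ranges, and texture list are hypothetical. In a real pipeline, each sampled configuration would be passed to a renderer.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical asset library and parameter ranges, chosen only for illustration.
TEXTURES = ("wood", "brushed_metal", "matte_plastic", "cardboard")


@dataclass(frozen=True)
class SceneParams:
    light_intensity: float                 # arbitrary units
    object_position: Tuple[float, float]   # (x, y) on a work surface, metres
    object_scale: float                    # multiplier on the object's nominal size
    texture: str


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one randomized scene configuration."""
    return SceneParams(
        light_intensity=rng.uniform(200.0, 2000.0),
        object_position=(rng.uniform(-0.5, 0.5), rng.uniform(-0.3, 0.3)),
        object_scale=rng.uniform(0.8, 1.2),
        texture=rng.choice(TEXTURES),
    )


def generate_scene_configs(count: int, seed: int = 42) -> List[SceneParams]:
    """Seeded so the same synthetic dataset can be regenerated exactly."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(count)]


if __name__ == "__main__":
    for params in generate_scene_configs(3):
        print(params)
```

Seeding the generator is a small design choice that makes a synthetic dataset reproducible, which helps when comparing models trained on it.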

Train and validate: In addition to pretrained computer vision models available on NVIDIA NGC, the NVIDIA DGX platform, a fully integrated hardware and software AI platform, may be utilized with physically based data to train or fine-tune AI models using frameworks like TensorFlow, PyTorch, or NVIDIA TAO. After training, reference apps such as NVIDIA Isaac Sim may be used to test the model and its software stack in simulation. Additionally, developers may use open-source frameworks like Isaac Lab to use reinforcement learning to improve the robot’s abilities.

To power a physical autonomous machine, such as a humanoid robot or an industrial automation system, the optimized stack can then be deployed on NVIDIA Jetson Orin and, eventually, the next-generation Jetson Thor robotics supercomputer.

Read more on govindhtech.com
